As a commercially licensed application, Gaussian09 comes with some restrictions when run at the CUNY HPC Center.

A SLURM script must be used to run a Gaussian09 job. The HPCC's main cluster, Penzias, supports Gaussian jobs requiring up to 64 GB of memory. Any job needing more than 24 GB but less than 64 GB must be submitted to the partition 'partgaularge' and can use up to 16 cores. All other jobs can use up to 8 cores and must be submitted to the partition 'production_gau'. Users should choose the partition that matches their simulation's requirements.
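The partition rule above can be written as a small helper, for example to sanity-check a job before writing its SLURM script. This is a minimal sketch that encodes only the limits stated in this section. The function name is hypothetical, and treating exactly 64 GB as allowed in 'partgaularge' is an assumption based on the "up to 64GB" wording.

```python
def choose_partition(mem_gb: float, cores: int) -> str:
    """Return the Penzias partition for a Gaussian job (hypothetical helper).

    Encodes the limits described above:
      * more than 24 GB (up to 64 GB) -> 'partgaularge', at most 16 cores
      * everything else               -> 'production_gau', at most 8 cores
    Treating exactly 64 GB as allowed is an assumption.
    """
    if mem_gb <= 0 or cores <= 0:
        raise ValueError("memory and core count must be positive")
    if mem_gb > 64:
        raise ValueError("Penzias supports Gaussian jobs of at most 64 GB")
    if mem_gb > 24:
        partition, max_cores = "partgaularge", 16
    else:
        partition, max_cores = "production_gau", 8
    if cores > max_cores:
        raise ValueError(
            f"partition {partition} allows at most {max_cores} cores, got {cores}"
        )
    return partition


if __name__ == "__main__":
    print(choose_partition(8, 4))    # a small job: 8 GB on 4 cores
    print(choose_partition(48, 16))  # a large-memory job
```

A job that needs 24 GB or less but asks for 16 cores is rejected, since only 'partgaularge' allows more than 8 cores.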

Gaussian 16 Rev C.01 Has Been Released: The latest version of Gaussian 16 has been released. Last updated on: 05 January 2017.

Gaussian Scratch File Storage Space

If a single Gaussian job is using all the cores on a particular node (this is often the case), then that node's entire local scratch space is available to that job, assuming files from previous jobs have been cleaned up. To use Gaussian scratch space correctly, users must not modify their SLURM scripts to place Gaussian scratch files anywhere other than the directories used in the recommended scripts. In particular, users MUST NOT place their scratch files in their home directories. The home directory is backed up to tape, and backing up large integral files would waste backup tapes and lengthen the time needed to complete backups.

Users are encouraged to make sure that their scratch data is removed after each completed Gaussian run. The example SLURM script for submitting Gaussian jobs includes a final line that removes scratch files, but this does not always succeed, so you may have to remove your scratch files manually. The example script prints both the node where the job ran and the unique name of each Gaussian job's scratch directory. Please police your own use of Gaussian scratch space by going to '/scratch/gaussian/g09_scr' and looking for directories whose names begin with your name and the date the directory was created. As a rule of thumb, you can request 100 GB of scratch space per core in the SLURM script, although this is NOT guaranteed to be enough. Finally, note that Gaussian scratch files are NOT backed up. Users are encouraged to save their checkpoint files in their SLURM 'working directories' and, because these are temporary, to copy any files needed for future work to '/global/u/username', where they will be backed up.
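Because the cleanup line in the job script does not always succeed, a short script can help you find leftover scratch directories. The sketch below assumes, as described above, that your directories under '/scratch/gaussian/g09_scr' have names beginning with your user name; the function name is hypothetical. Since the scratch space is local to each compute node, you would presumably need to run it on the node reported by your job.

```python
from __future__ import annotations

import getpass
from pathlib import Path


def find_my_scratch_dirs(root: str, username: str) -> list[tuple[str, int]]:
    """List scratch directories under `root` whose names start with `username`.

    Returns (directory name, total size of regular files in bytes),
    largest first, so the biggest leftovers are easy to spot.
    """
    root_path = Path(root)
    if not root_path.is_dir():
        return []
    found = []
    for entry in root_path.iterdir():
        if entry.is_dir() and entry.name.startswith(username):
            size = sum(f.stat().st_size for f in entry.rglob("*") if f.is_file())
            found.append((entry.name, size))
    found.sort(key=lambda item: item[1], reverse=True)
    return found


if __name__ == "__main__":
    for name, size in find_my_scratch_dirs("/scratch/gaussian/g09_scr", getpass.getuser()):
        print(f"{size / 1e9:8.2f} GB  {name}")
```

Listing the largest directories first makes it easy to decide what to delete; the script itself only reports and never removes anything.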

NOTE: If other users have failed to clean up after themselves, and you request the maximum amount of Gaussian scratch space, it may not be available and your job may sit in the queue.

Gaussian SLURM Job Submission

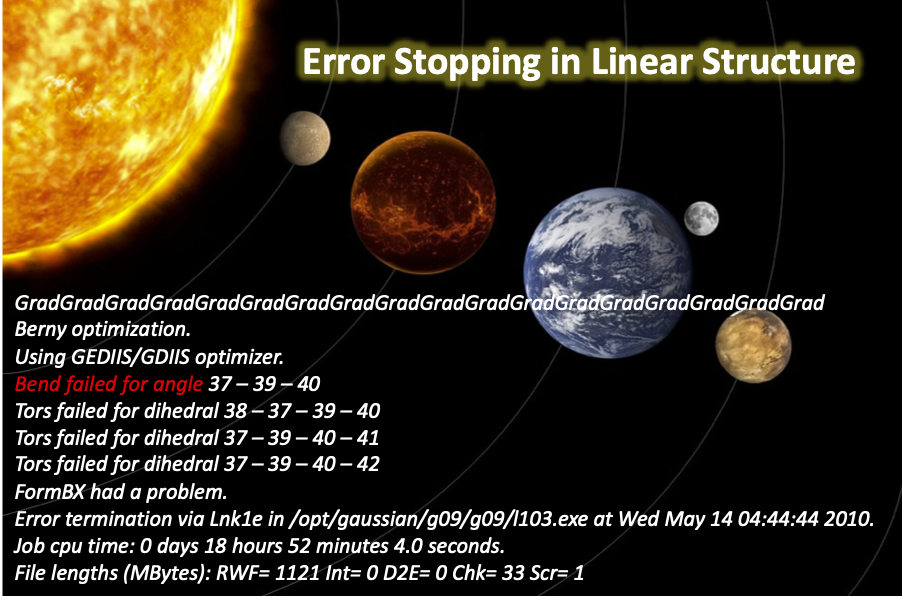

As noted, Gaussian parallel jobs are limited to the number of cores on a single compute node. On small nodes the maximum processor (core) count is eight (8) and memory is limited to 2880 MB per core; on large nodes the maximum is 16 cores and memory is limited to 3688 MB per core. Here we provide a simple Gaussian input file (a Hartree-Fock geometry optimization of methane) and the companion SLURM batch script, which allocates 4 cores on a single compute node and 400 GB of compute-node-local storage.
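The per-core limits above determine the largest memory request that fits a given core count. The sketch below encodes only the numbers stated in this paragraph (the dictionary and function names are hypothetical) and checks the 8 GB request of the methane example described in this section against 4 small-node cores. It assumes 1 GB = 1024 MB when comparing a '%mem' value in GB with these MB limits.

```python
# Per-node limits quoted above (names are hypothetical).
NODE_LIMITS = {
    "small": {"max_cores": 8, "mb_per_core": 2880},
    "large": {"max_cores": 16, "mb_per_core": 3688},
}


def max_memory_mb(node_type: str, cores: int) -> int:
    """Largest memory request (MB) allowed for `cores` cores on this node type."""
    limits = NODE_LIMITS[node_type]
    if not 1 <= cores <= limits["max_cores"]:
        raise ValueError(
            f"{node_type} nodes support 1 to {limits['max_cores']} cores, got {cores}"
        )
    return cores * limits["mb_per_core"]


def fits(node_type: str, cores: int, mem_gb: float) -> bool:
    """True if a %mem request of `mem_gb` GB (1 GB = 1024 MB) fits the per-core limit."""
    return mem_gb * 1024 <= max_memory_mb(node_type, cores)


if __name__ == "__main__":
    print(max_memory_mb("small", 8), max_memory_mb("large", 16))
    print(fits("small", 4, 8))  # the methane example: 8 GB on 4 cores
```

A full small node therefore offers 23040 MB (22.5 GB), which stays below the 24 GB threshold for 'production_gau'.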

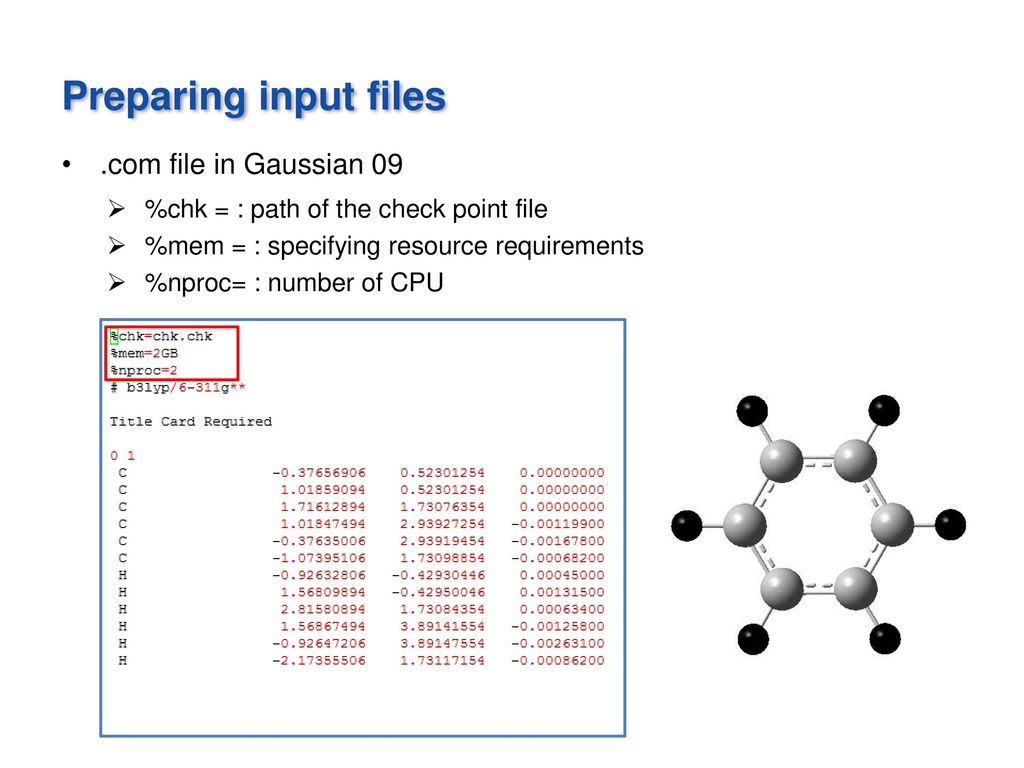

The Gaussian 09 methane input deck is:

Notice that we have explicitly requested 8 GB of memory with the '%mem=8GB' directive. The input file also instructs Gaussian to use 4 processors, which ensures that all of Gaussian's parallel executables (i.e., links) run in SMP mode on 4 cores. For this simple methane geometry optimization, requesting these resources (both here and in the SLURM script) is a bit extravagant, but both the input file and the script can be adapted to larger molecular systems and more accurate calculations. Users can make pro-rated adjustments to the resources requested in BOTH the Gaussian input deck and the SLURM submit script to run jobs on 2, 4, or 8 cores.
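The pro-rating rule can be sketched as linear scaling from the methane example (4 cores, 8 GB of memory, 400 GB of scratch, i.e. 2 GB of memory and 100 GB of scratch per core). The function name is hypothetical, and writing the processor count with the '%nprocshared' Link 0 directive is an assumption; this section does not show which directive the sample input uses.

```python
def prorate_resources(cores: int, base_cores: int = 4,
                      base_mem_gb: int = 8, base_scratch_gb: int = 400) -> dict:
    """Scale the methane example's resources linearly to `cores` cores.

    Returns the memory and scratch requests plus matching Link 0 lines;
    the same values must also be used in the SLURM script.
    """
    if not 1 <= cores <= 8:
        raise ValueError("production_gau jobs use 1 to 8 cores")
    # Integer division is exact for the defaults; it rounds down otherwise.
    mem_gb = base_mem_gb * cores // base_cores
    scratch_gb = base_scratch_gb * cores // base_cores
    return {
        "cores": cores,
        "mem_gb": mem_gb,
        "scratch_gb": scratch_gb,
        "link0": [f"%mem={mem_gb}GB", f"%nprocshared={cores}"],
    }


if __name__ == "__main__":
    for n in (2, 4, 8):
        r = prorate_resources(n)
        print(n, r["scratch_gb"], " ".join(r["link0"]))
```

Generating both the input header and the SLURM values from one function keeps the two in agreement, which is the point of making BOTH adjustments together.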

Here is the Gaussian SLURM script, named g09.job, intended for use with G09:

To run the job, submit it with the standard SLURM job submission command, 'sbatch g09.job'.

Users may choose to run jobs with fewer processors (cores, CPUs) and smaller storage requests than this sample job. This includes single-processor jobs and others that use a fraction of a compute node (2, 4, or 6 processors). On a busy system, these smaller jobs may start sooner than those requesting a full 8 processors on a single node. Selecting the most efficient combination of processors, memory, and storage ensures that resources are not wasted and remain available for the next job submitted.

For large jobs (up to 64 GB), users may use the following script, named g09_large.job:

To run the job, use the following SLURM job submission command: 'sbatch g09_large.job'.

CHPC Software: Gaussian 09

NOTE: Gaussian 09 was replaced by Gaussian 16 in early 2017.

Gaussian 09 is the version of the Gaussian quantum chemistry package that replaced Gaussian 03.

- Current revision: D.01
- Machines: All clusters
- Location of latest revision:
  - lonepeak: /uufs/chpc.utah.edu/sys/pkg/gaussian09/EM64TL
  - ember, ash, kingspeak: /uufs/chpc.utah.edu/sys/pkg/gaussian09/EM64T

Please direct questions regarding Gaussian to the Gaussian developers. The home page for Gaussian is http://www.gaussian.com/. A user's guide and a programmer's reference manual are available from Gaussian, and the user's guide is also available online at the Gaussian website.

IMPORTANT NOTE: The licensing agreement with Gaussian allows this program to be used ONLY for academic research purposes and only for research done in association with the University of Utah. NO commercial development, and NO use in software being developed for commercial release, is permitted. NO use of this program to compare the performance of Gaussian 09 with competitors' products (e.g., Q-Chem, Schrodinger) is allowed. The source code cannot be used or accessed by any individual involved in the development of computational algorithms that may compete with those of Gaussian Inc. If you have any questions about this, please contact Anita Orendt at anita.orendt@utah.edu.

In addition, to use Gaussian09 you must be a member of the gaussian users group.
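On a Linux system you can check group membership with Python's standard library. This is a minimal sketch; the function name is hypothetical, and the group name 'gaussian' is an assumption based on the wording above, so confirm the actual group name with CHPC.

```python
import grp
import os


def in_group(group_name: str) -> bool:
    """True if the current process belongs to `group_name` (primary or supplementary)."""
    try:
        gid = grp.getgrnam(group_name).gr_gid
    except KeyError:  # no such group on this system
        return False
    return gid == os.getgid() or gid in os.getgroups()


if __name__ == "__main__":
    print(in_group("gaussian"))  # the group name is an assumption
```

Group changes typically take effect only in new login sessions, so a newly granted membership may not show up until you log in again.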

Parallel Gaussian:

The general rule is that SCF/DFT/MP2 energies and optimizations, as well as SCF/DFT frequencies, scale well when run on multiple nodes. For DFT jobs, the earlier problem of larger cases (more than 60 unique, non-symmetry-related atoms) not running in parallel has been resolved: you no longer need to turn off the FMM with Int=FMMNAtoms=n, where n is the number of atoms, to run these cases in parallel.

If you need to run non-restartable serial jobs that will take longer than the queue time limits, you can request access to the long queue on a case-by-case basis.

As always, when running a new type of job, it is best to first test how well it runs in parallel by running a test job on both 1 and 2 nodes and comparing the timings before submitting a job that uses 4, 8, or even more nodes. The timing differences are easiest to see with the p printing option (replace the # at the beginning of the keyword line in the input file with #p).
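If you prepare many test inputs, the change from '#' to '#p' can be scripted. The sketch below (hypothetical function name) edits only the first line that begins with '#', i.e. the route line, and only when it uses the default '#' or '#N' print level; lines already starting with '#P' or '#T' are left alone.

```python
import re

# A route line starts with '#', optionally followed by a print level
# (N = normal, P = additional output, T = terse) and then whitespace.
_DEFAULT_ROUTE = re.compile(r"^(\s*)#[Nn]?(?=\s|$)")


def enable_p_printing(com_text: str) -> str:
    """Return `com_text` with a default '#' or '#N' route line changed to '#p'."""
    lines = com_text.splitlines(keepends=True)
    for i, line in enumerate(lines):
        if line.lstrip().startswith("#"):
            lines[i] = _DEFAULT_ROUTE.sub(r"\1#p", line, count=1)
            break  # only the first route line is touched
    return "".join(lines)


if __name__ == "__main__":
    # Placeholder input, not the methane deck from this page.
    deck = "%mem=8GB\n# HF/3-21G opt\n\nmethane test\n"
    print(enable_p_printing(deck))
```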

To Use:

Batch Script


It is important to use scratch space for file storage during the job, because the files created by the program are very large.

When choosing a value for the %mem variable, leave at least 64 MB of the total memory on the nodes you will be using for the operating system. Otherwise your job will have problems, may die, and in some cases may cause the node to go down. Ember and ash nodes have 24 GB and Kingspeak nodes have 64 GB; if more memory is needed, lonepeak nodes have either 96 or 256 GB.
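The memory headroom rule can be turned into a quick calculation of the largest '%mem' value for a node. This is an illustration only: the function names are hypothetical, it assumes 1 GB = 1024 MB, and it uses the 64 MB minimum reserve stated above as its default (pass a larger reserve if you want more headroom).

```python
# Node memory sizes quoted above, in GB (lonepeak nodes have either 96 or 256 GB).
NODE_MEMORY_GB = {"ember": 24, "ash": 24, "kingspeak": 64}


def max_mem_mb(node_memory_gb: float, os_reserve_mb: int = 64) -> int:
    """Largest %mem (MB) that leaves `os_reserve_mb` for the operating system."""
    if os_reserve_mb < 64:
        raise ValueError("leave at least 64 MB for the operating system")
    total_mb = int(node_memory_gb * 1024)  # assumes 1 GB = 1024 MB
    return total_mb - os_reserve_mb


def mem_directive(node_memory_gb: float, os_reserve_mb: int = 64) -> str:
    """Format the result as a Gaussian %mem line."""
    return f"%mem={max_mem_mb(node_memory_gb, os_reserve_mb)}MB"


if __name__ == "__main__":
    for cluster, gb in NODE_MEMORY_GB.items():
        print(cluster, mem_directive(gb))
```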

There are two levels of parallelization in Gaussian: shared memory and distributed. Because all of our compute nodes have multiple cores, you should ALWAYS set %nprocs to the number of cores per node. This number should agree with the ppn value set on the PBS -l line in the batch script. The method for specifying the use of multiple nodes has changed in G09: the %nprocl parameter is no longer used. Instead, the PBS script creates a file called Default.Route that contains the list of nodes in the proper format. This change also limits the number of nodes that can be used (at least for now). The limit comes from a maximum line length and is between 8 and 12 nodes, depending on the length of the cluster name.
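Because %nprocs in the input must agree with the ppn value in the batch script, a pre-submission check can catch mismatches. This sketch (hypothetical function names) reads the first '%nproc', '%nprocs', or '%nprocshared' Link 0 line from a .com file's text and compares it with the cores per node you intend to request. Passing ppn in by hand, rather than reading it from the batch script, is a simplification.

```python
import re
from typing import Optional

# Matches %nproc=, %nprocs=, or %nprocshared= (case-insensitive), but not %nprocl=.
_NPROC = re.compile(r"\s*%nproc(?:s|shared)?\s*=\s*(\d+)", re.IGNORECASE)


def link0_nprocs(com_text: str) -> Optional[int]:
    """Return the processor count from the first matching Link 0 line, or None."""
    for line in com_text.splitlines():
        match = _NPROC.match(line)
        if match:
            return int(match.group(1))
    return None


def check_nprocs(com_text: str, ppn: int) -> None:
    """Raise ValueError unless the input's processor count equals `ppn`."""
    nprocs = link0_nprocs(com_text)
    if nprocs is None:
        raise ValueError("no %nprocs line found in the input")
    if nprocs != ppn:
        raise ValueError(f"%nprocs={nprocs} does not match ppn={ppn}")


if __name__ == "__main__":
    deck = "%nprocs=16\n%mem=60GB\n# B3PW91 opt\n"
    check_nprocs(deck, ppn=16)  # e.g. a 16-core Kingspeak node
    print("ok")
```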

To run G09 or GaussView (GV) jobs interactively, you need to be using the gaussian09 environment variables as set in the .tcshrc script provided by CHPC (uncomment the line 'source /uufs/chpc.utah.edu/sys/pkg/gaussian09/EM64T/etc/g09.csh' in each cluster section). You therefore must have this file in your home directory. You need it even if your normal shell is bash, because Gaussian requires csh to run. Alternatively, if you use modules, you can set these variables with 'module load gaussian09'.

An example SLURM script can be obtained from the directory /uufs/chpc.utah.edu/sys/pkg/gaussian09/etc/. The file to use is g09-module.slurm. If you are not yet using modules, please consider switching to them; if you prefer not to, please talk to Anita for assistance.

Please read the comments in the script to learn what each setting does, and make sure these variables agree with the settings in the % section of the .com file and with the SLURM directives in the batch script.


Example of parallel scaling using G09

These timings can be compared with those given on the G03 page.

The job is a DFT (B3PW91) optimization with 650 basis functions that takes 8 optimization cycles to converge.

| Cluster | Number of Nodes | Number of Processors | Wall time to complete job |
|---|---|---|---|
| Kingspeak | 1 | 16 | 1.25 hrs |
| Kingspeak | 2 | 32 | 0.75 hrs |
| Ember | 1 | 12 | 3.25 hrs |
| Ember | 2 | 24 | 1.75 hrs |
| Updraft (Retired) | 1 | 8 | 4.25 hrs |
| Updraft (Retired) | 2 | 16 | 2.25 hrs |
| Updraft (Retired) | 4 | 32 | 1.25 hrs |
| Sanddunearch (Retired) | 1 | 4 | 12.75 hrs |
| Sanddunearch (Retired) | 2 | 8 | 7.0 hrs |
| Sanddunearch (Retired) | 4 | 16 | 3.5 hrs |
| Sanddunearch (Retired) | 8 | 32 | 2 hrs |
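The optimization timings can be read in terms of speedup (baseline wall time divided by wall time) and parallel efficiency (speedup divided by the increase in processor count). The sketch below computes both from the Sanddunearch and Kingspeak optimization timings in the table; the function name is illustrative.

```python
def scaling(timings):
    """Speedup and parallel efficiency relative to the first (baseline) run.

    `timings` is a list of (processors, wall_time_hours) pairs.
    Returns a list of (processors, speedup, efficiency) tuples.
    """
    base_procs, base_time = timings[0]
    results = []
    for procs, wall in timings:
        speedup = base_time / wall
        efficiency = speedup / (procs / base_procs)
        results.append((procs, speedup, efficiency))
    return results


# DFT optimization timings from the table above.
SANDDUNEARCH = [(4, 12.75), (8, 7.0), (16, 3.5), (32, 2.0)]
KINGSPEAK = [(16, 1.25), (32, 0.75)]

if __name__ == "__main__":
    for name, data in (("Sanddunearch", SANDDUNEARCH), ("Kingspeak", KINGSPEAK)):
        for procs, speedup, eff in scaling(data):
            print(f"{name:12s} {procs:3d} procs  speedup {speedup:5.2f}  efficiency {eff:4.2f}")
```

For Sanddunearch, going from 4 to 32 processors gives a speedup of about 6.4 and an efficiency of about 0.80, while Kingspeak's two-node run reaches an efficiency of about 0.83.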

Timings for the DFT frequency calculation of the same case:


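| Cluster | Number of Nodes | Wall time to complete job |
|---|---|---|
| Kingspeak | 1 | 1.5 hrs |
| Kingspeak | 2 | < 1.0 hrs |
| Ember | 1 | 3.25 hrs |
| Ember | 2 | 1.5 hrs |
| Updraft (Retired) | 1 | 4.25 hrs |
| Updraft (Retired) | 2 | 2.25 hrs |
| Updraft (Retired) | 4 | 1.0 hrs |
| Sanddunearch (Retired) | 1 | 11.5 hrs |
| Sanddunearch (Retired) | 2 | 5.75 hrs |
| Sanddunearch (Retired) | 4 | 3 hrs |
| Sanddunearch (Retired) | 8 | 1.5 hrs |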